The data journey at Daltix.

We take you on a data journey so that you can draw insights for better strategic decisions, tactical optimisation and operational execution.

What we do for your insights.

Data collection

Daily, high-quality data where possible.

Multiple stores and regions if possible.

Field data when necessary.

Data structure

Selection and set-up of technologies.

Maintenance and future-proofing of technology.

Product matching (AI & ML).

Data cleaning & quality

Error detection & back-processing.

Anomaly detection.

Data collection.

We collect information on over 8 million products every day. Collecting data at this scale, with quality as the end goal, takes a rigorous process, so that you have reliable FMCG data at hand.

We rely on a highly experienced data collection and data augmentation team.

Our process

- Handle anti-scraping technologies

- Reprocess data from temporarily available webshops

- Eliminate human error through input validation

- Scrape data with location awareness

- Conduct completeness checks

- Prioritise a solid data lineage set-up

- Process raw data collected in the field
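The input validation and completeness checks listed above could look roughly like the minimal Python sketch below. The record fields, the `validate` and `completeness` helpers and the rules they apply are hypothetical illustrations, not Daltix's actual pipeline.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical shape of one scraped product observation; the field names are
# illustrative assumptions, not an actual Daltix schema.
@dataclass
class ProductRecord:
    shop: str
    store_id: str  # location awareness: the store or region the price applies to
    product_id: str
    name: str
    price: Optional[float]


def validate(record: ProductRecord) -> list[str]:
    """Return the validation errors for one record (an empty list means it passes)."""
    errors = []
    if not record.product_id:
        errors.append("missing product_id")
    if not record.store_id:
        errors.append("missing store_id")
    if not record.name.strip():
        errors.append("missing name")
    if record.price is None:
        errors.append("missing price")
    elif record.price <= 0:
        errors.append("non-positive price")
    return errors


def completeness(collected: set[str], expected: set[str]) -> float:
    """Share of the expected product IDs that were actually collected."""
    if not expected:
        return 1.0
    return len(collected & expected) / len(expected)


if __name__ == "__main__":
    records = [
        ProductRecord("shop-a", "store-1", "p1", "Coffee 500g", 4.99),
        ProductRecord("shop-a", "store-1", "p2", "", -1.0),
    ]
    for r in records:
        print(r.product_id, validate(r))
    # p1 []
    # p2 ['missing name', 'non-positive price']
    print(completeness({"p1", "p2"}, {"p1", "p2", "p3", "p4"}))  # 0.5
```

In a setup like this, a completeness ratio below some chosen threshold (the threshold is an assumption) would trigger a re-scrape or an alert rather than silently passing partial data downstream.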

Our set-up

- Cloud-based data architecture

- Best-of-breed data warehouse vendors, e.g. Snowflake

- Native scalability

- Enterprise features such as secure data sharing and access control

- Data lake and data mart approach, giving unlimited historical data

- A unique identifier assigned to each product in the data

- Autoscaling data processing pipelines

- Natural language processing (NLP) to detect language specifics

Data structure.

Structuring data requires a deep understanding of how to select, set up and maintain the underlying technologies. We ensure our technology choices are future-proof and support innovation.

Structuring data takes a specific skill set and body of knowledge. It is not everyone's core business, but it is ours.

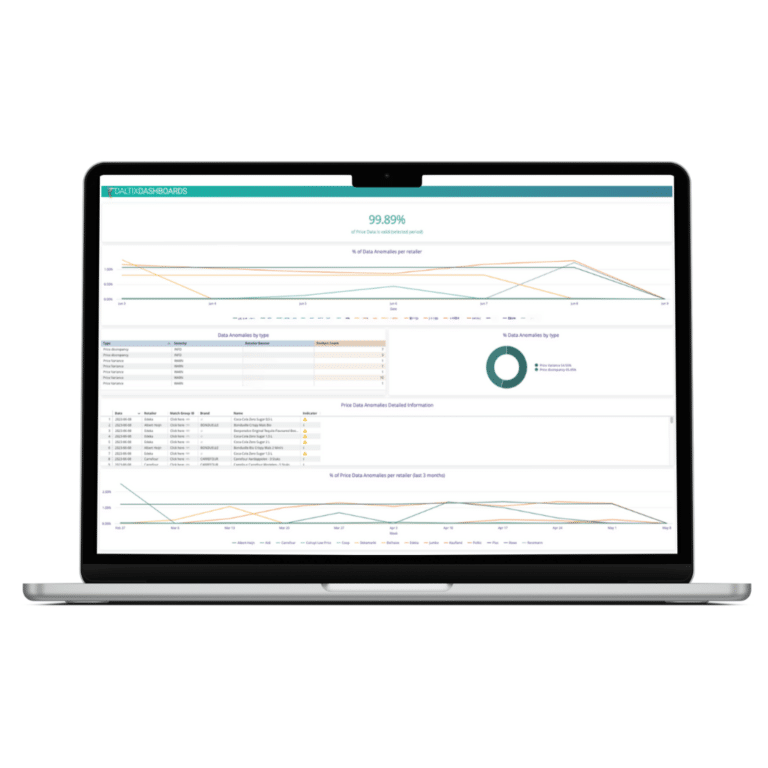

Data cleaning & quality.

We process over 35 million data points daily, which yields highly complete datasets to draw insights from. Our data goes through extensive cleaning and quality checks so that you have reliable FMCG data for premium analyses.

We have a robust process for logging data issues, mitigating them and putting preventive measures in place.

Our process

- Error detection that flags missing, duplicate or incorrect fields

- Elimination of duplicate products through uniqueness constraints

- Data normalisation

- Common open-source tooling to standardise and augment incoming data

- Anomaly detection using quality metadata indicators

- Several rounds of back-processing to recompute derived values and calculations
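The deduplication, normalisation and anomaly-detection steps above could be sketched as follows. The helper names are hypothetical, and treating a daily record count as the "quality metadata indicator" with a simple k-sigma rule is an assumption made for illustration, not a description of Daltix's method.

```python
import statistics


def normalise_name(name: str) -> str:
    """Minimal normalisation: lowercase and collapse runs of whitespace."""
    return " ".join(name.lower().split())


def deduplicate(rows: list[dict], key_fields: tuple[str, ...]) -> tuple[list[dict], list[dict]]:
    """Enforce uniqueness on key_fields: keep the first row per key, return (unique, duplicates)."""
    seen: set[tuple] = set()
    unique: list[dict] = []
    duplicates: list[dict] = []
    for row in rows:
        key = tuple(row.get(field) for field in key_fields)
        if key in seen:
            duplicates.append(row)
        else:
            seen.add(key)
            unique.append(row)
    return unique, duplicates


def is_anomalous(history: list[int], today: int, k: float = 3.0) -> bool:
    """Flag today's value if it is more than k sample standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return today != mean
    return abs(today - mean) > k * spread


if __name__ == "__main__":
    rows = [
        {"shop": "a", "product_id": "p1", "date": "2024-01-01"},
        {"shop": "a", "product_id": "p1", "date": "2024-01-01"},
        {"shop": "a", "product_id": "p2", "date": "2024-01-01"},
    ]
    unique, duplicates = deduplicate(rows, ("shop", "product_id", "date"))
    print(len(unique), len(duplicates))  # 2 1
    print(normalise_name("  Coffee   BEANS "))  # coffee beans
    daily_counts = [1000, 1010, 990, 1005, 995]  # hypothetical records per day for one shop
    print(is_anomalous(daily_counts, 600))   # True
    print(is_anomalous(daily_counts, 1003))  # False
```

Returning the duplicates instead of discarding them keeps them available for the issue log described above.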

What you do for your insights.

Data exchange via SFTP makes access easy, fast and secure.

Data storage

Fast and easy data access.

Scalable & shareable data warehousing.

Data integration

Only as strong as your identifier(s).

EAN and/or article number based.
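Because integration is only as strong as the identifier, a join on EAN can start by rejecting malformed codes. The sketch below is a hypothetical illustration, not a prescribed method: it checks the EAN-13 check digit (GS1 weighting of 1 and 3) and then inner-joins collected market rows onto an internal product list. The row layout and the `sku` and `price` fields are assumptions; matching on article numbers would need a retailer-specific mapping and is not shown.

```python
def is_valid_ean13(code: str) -> bool:
    """Check an EAN-13 code's length, digits and check digit."""
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(c) for c in code]
    # Left to right, the first 12 digits are weighted 1, 3, 1, 3, ...
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    return (10 - total % 10) % 10 == digits[12]


def join_on_ean(market_rows: list[dict], internal_rows: list[dict]) -> list[tuple[dict, dict]]:
    """Inner-join market rows to internal rows on EAN, ignoring invalid codes."""
    internal_by_ean = {r["ean"]: r for r in internal_rows if is_valid_ean13(r["ean"])}
    pairs = []
    for row in market_rows:
        match = internal_by_ean.get(row["ean"])  # invalid EANs never appear as keys
        if match is not None:
            pairs.append((match, row))
    return pairs


if __name__ == "__main__":
    print(is_valid_ean13("4006381333931"))  # True
    print(is_valid_ean13("4006381333932"))  # False
    internal = [{"ean": "4006381333931", "sku": "A-1"}]
    market = [
        {"ean": "4006381333931", "price": 2.49},
        {"ean": "1234567890123", "price": 1.00},
    ]
    print(len(join_on_ean(market, internal)))  # 1
```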

Data analysis

Performant, “plug and play” data.

Full flexibility: live connections or extracts at a fixed frequency.