Daltix Data Architecture: Data extraction

11 October 2022

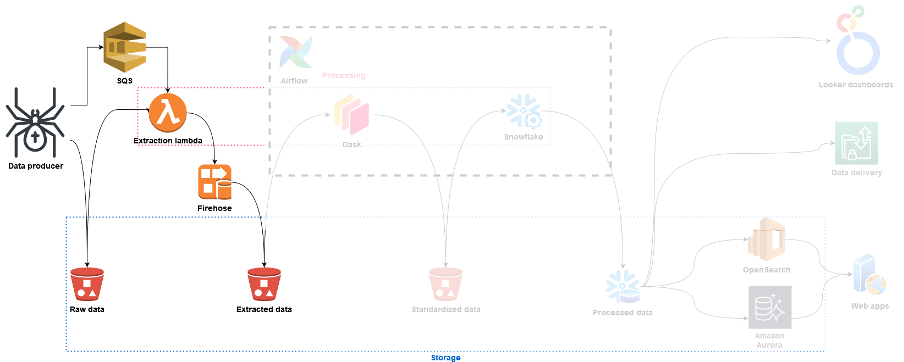

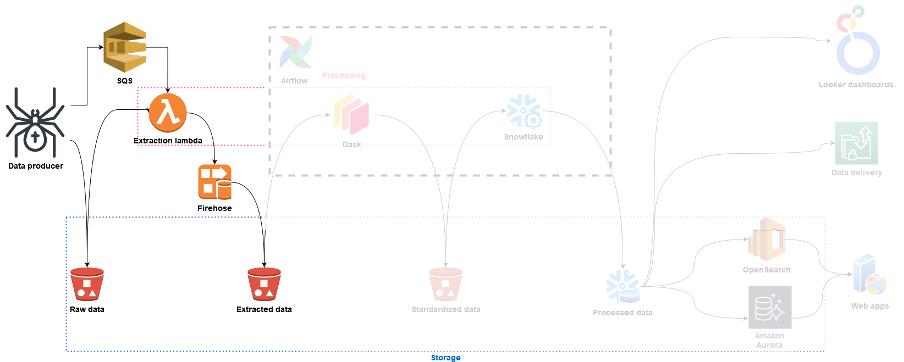

In the previous post in our data architecture series, we gave a high-level overview of what our data architecture looks like. In the next few posts, we’ll zoom in on the different parts of that architecture to give more detail on how everything is set up, and why. Today we’ll discuss what happens to the data when it first arrives in our data lake: the raw and extracted layers.

The reason for the ‘extracted layer’.

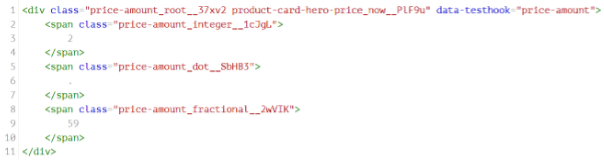

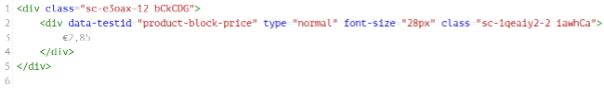

When we download our data from the internet as HTML or JSON files, the full HTML page or JSON document is saved without alterations to the raw data bucket in S3. If you’ve ever taken a look at the source of a web page, you’ll know that the actual information portrayed on the website is encapsulated in layers and layers of HTML tags that do not necessarily carry useful information. For example, in the case of supermarket B above, the useful information is really just the text ‘€2.85’; all the rest is irrelevant to our use case of product and pricing data. The first step in our data processing pipeline is to sieve out these useful snippets of information from the rest of the document, so we drastically reduce the amount of data to be processed in the rest of the pipeline.

Aside from reducing data size, the extracted layer serves one other purpose. If we compare the two code snippets above, it’s easy to see that they contain similar information – ‘2.59’ and ‘€2.85’ – but the structure in which that information appears is drastically different. Even worse, since companies regularly update their websites, there is no guarantee that a store’s website will have the same structure tomorrow as it does today. Clearly, some logic is needed to determine which data sits where before we can standardise the data. To simplify the standardisation process, we eliminate this variance here by assigning standardised keys to the data at this stage. For example, for the two snippets above, the data in the extracted layer would consist of two JSON documents, each holding its price under the same standardised key.
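To make the idea concrete, here is a minimal sketch of per-shop extraction logic that maps two differently structured pages onto one standardised key. The HTML strings are hypothetical stand-ins (the real snippets are only shown as images above), and the key name `price`, the shop identifiers and the function names are assumptions for illustration, not our actual schema.

```python
import re

# Hypothetical page fragments; only the values '2.59' and '€2.85' come from the post.
SHOP_A_HTML = '<div class="prod"><span data-price="2.59"></span></div>'
SHOP_B_HTML = '<li><p class="amount"><strong>€2.85</strong></p></li>'


def _first_match(pattern: str, html: str) -> str:
    """Return the first capture group of pattern in html, or raise if absent."""
    match = re.search(pattern, html)
    if match is None:
        raise ValueError("page structure changed: pattern not found")
    return match.group(1)


def extract_shop_a(html: str) -> dict:
    # Shop A (hypothetically) keeps the price in a data attribute.
    return {"price": _first_match(r'data-price="([^"]+)"', html)}


def extract_shop_b(html: str) -> dict:
    # Shop B (hypothetically) keeps the price as text inside a <strong> tag.
    return {"price": _first_match(r"<strong>([^<]+)</strong>", html)}


# One extractor per source; every extractor emits the same keys.
EXTRACTORS = {"shop_a": extract_shop_a, "shop_b": extract_shop_b}


def extract(shop: str, html: str) -> dict:
    """Dispatch to the shop-specific extractor and return the standardised document."""
    return EXTRACTORS[shop](html)
```

Note that the values are still raw strings at this point (one with a currency symbol, one without); turning them into comparable numbers is left to the standardisation step.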

This way, the code that standardises this data into the next stage doesn’t have to worry about translating column names, and only has to process data that is actually relevant to the standardisation exercise. Note that all of these examples use HTML, but the same goes for JSON: structures differ widely between websites, and the documents often contain a lot of information about how to display the data correctly, which is usually not data we’re interested in.

Of course, all of this could also have been implemented in the code that crawls websites, so why bother having a dedicated processing step for this? As we mentioned in the previous post, we thought that the most interesting thing about online retail was the pricing, but we soon learned that wasn’t necessarily true; a lot of value is also stored in other product data (anything ranging from its name to where it was produced). We expect that there will come a time when we realise we’ve missed out another piece of data from our input that would actually be good to have.

By storing the raw documents we download, we ensure that we can always go back and get those bits. It also ensures that if a website changes slightly, we don’t have to download all the web pages again; we can just re-process the ones we’ve already downloaded.

Extracting data

So how does it actually work? Our data producers, the crawlers (which we’ll talk more about in another series of posts), drop the documents they download into S3. Right after that, they publish the URI of the file they’ve just written to an SQS queue that holds all the URIs to be processed. Whatever gets published into this queue is then consumed by an AWS Lambda function. Having a queue here, rather than a direct link between S3 and Lambda through, for example, EventBridge, means that if we ever need to re-process a bunch of files for whatever reason, we can just push the list of URIs we want redone to the queue, and the pipeline picks up automatically from there.
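The re-processing path can be sketched roughly as follows. This is an illustration under assumptions, not our production code: the function names are hypothetical, and we assume each message body is simply the S3 URI as plain text. The `send_message_batch` call and its limit of 10 entries per request are standard SQS behaviour; the client is passed in so the function can be exercised without AWS credentials.

```python
from typing import Iterator, List, Sequence

SQS_MAX_BATCH = 10  # SendMessageBatch accepts at most 10 entries per call


def chunked(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def requeue_uris(sqs_client, queue_url: str, uris: List[str]) -> int:
    """Push URIs back onto the queue in batches; return how many were sent.

    `sqs_client` is expected to behave like boto3.client("sqs").
    """
    sent = 0
    for batch in chunked(uris, SQS_MAX_BATCH):
        # Entry ids only need to be unique within a single batch request.
        entries = [
            {"Id": str(index), "MessageBody": uri}  # assumption: body is the bare URI
            for index, uri in enumerate(batch)
        ]
        response = sqs_client.send_message_batch(QueueUrl=queue_url, Entries=entries)
        failed = response.get("Failed", [])
        if failed:
            raise RuntimeError(f"{len(failed)} messages were rejected by SQS")
        sent += len(entries)
    return sent
```

With a real client this would be called as `requeue_uris(boto3.client("sqs"), queue_url, uris)`, where `queue_url` and `uris` are whatever needs redoing.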

As soon as a Lambda function gets fired, it reads the file at the URI it was called with, processes it and then writes the result as JSON to a Kinesis Firehose stream. We use Lambda functions here because they scale very well, and because they’re easy to implement (they can be pure Python code). They do have a drawback: because we process so many documents, the huge number of invocations does start incurring a considerable AWS cost. To mitigate this, we’re in the process of containerising these Lambda workloads and moving them to dedicated machines on our Kubernetes cluster.
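A stripped-down version of such a function might look like the sketch below. It assumes the SQS message body is a plain `s3://bucket/key` URI and that one newline-delimited JSON document is written per input file; `process_event`, `split_s3_uri` and the `extract` callback are hypothetical names. The clients are injected so the logic can be tested locally; in a real Lambda they would be `boto3.client("s3")` and `boto3.client("firehose")`.

```python
import json
from typing import Callable, Tuple
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> Tuple[str, str]:
    """Split 's3://bucket/some/key' into ('bucket', 'some/key')."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def process_event(
    event: dict,
    s3_client,
    firehose_client,
    extract: Callable[[str], dict],
    stream_name: str,
) -> int:
    """Handle one SQS-triggered invocation; return the number of files processed."""
    processed = 0
    # Lambda delivers SQS messages under event["Records"], one dict per message.
    for record in event["Records"]:
        bucket, key = split_s3_uri(record["body"])
        raw = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
        extracted = extract(raw.decode("utf-8"))
        # Trailing newline so that batched output stays one JSON document per line.
        payload = (json.dumps(extracted) + "\n").encode("utf-8")
        firehose_client.put_record(
            DeliveryStreamName=stream_name,
            Record={"Data": payload},
        )
        processed += 1
    return processed
```

Keeping the extraction logic behind a plain callable like this also makes it easier to run the same code outside Lambda, for instance in a container.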

Finally, our Kinesis Firehose stream accepts the JSON documents it receives from all these Lambda processes and bundles them, writing a batch every 128 MB or 5 minutes, whichever comes first. This batching ensures that the data we process further downstream does not come in millions of tiny files: processing fewer, larger files tends to be more efficient since it involves less I/O overhead. Since Firehose is a managed service, we don’t have to worry about scaling this stream or coding the batching behaviour, and we can focus on the actual data processing. At this point the data is available on S3 again in a more structured format, ready to be picked up by the standardisation suite.
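The "whichever comes first" rule is easy to picture with a small simulation. The class below is purely illustrative and is not how Firehose is implemented: `SizeOrTimeBuffer` is a made-up name, and unlike a real service it only checks the clock when a new record arrives rather than on a background timer.

```python
import time
from typing import Callable, List


class SizeOrTimeBuffer:
    """Collect records and emit a batch when a size or an age threshold is hit."""

    def __init__(
        self,
        max_bytes: int,
        max_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_bytes = max_bytes
        self.max_seconds = max_seconds
        self.clock = clock
        self.records: List[bytes] = []
        self.size = 0
        self.opened_at = 0.0
        self.batches: List[bytes] = []  # stands in for files written to storage

    def add(self, record: bytes) -> None:
        now = self.clock()
        # Time threshold: the open batch is too old, so close it first.
        if self.records and now - self.opened_at >= self.max_seconds:
            self.flush()
        if not self.records:
            self.opened_at = now  # this record opens a new batch
        self.records.append(record)
        self.size += len(record)
        # Size threshold: the open batch is big enough, so close it now.
        if self.size >= self.max_bytes:
            self.flush()

    def flush(self) -> None:
        if self.records:
            self.batches.append(b"".join(self.records))
        self.records = []
        self.size = 0
```

With the settings from this post, the thresholds would correspond to `max_bytes=128 * 1024 * 1024` and `max_seconds=300`.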

A note on storage

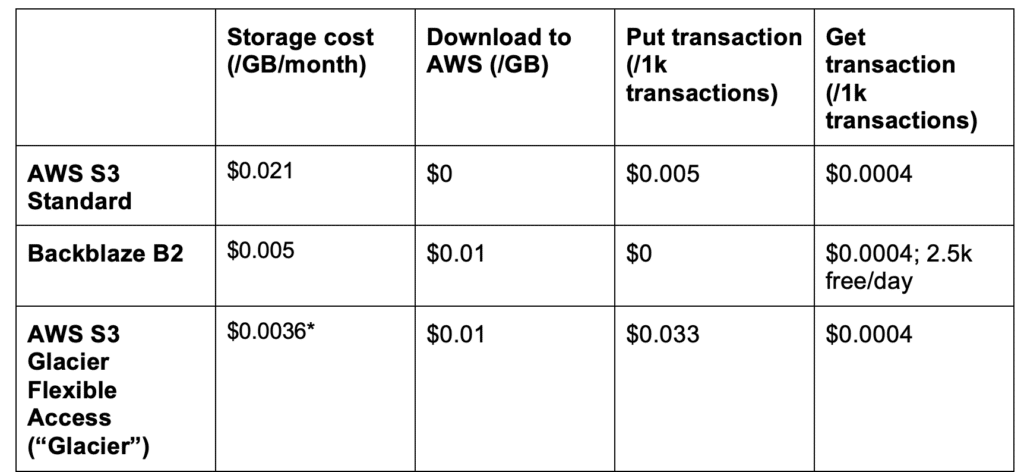

While how we process data is crucially important, how we store it is equally so. Data in the two stages we discussed today is only really kept for re-processing and error recovery: we don’t expect analysts to routinely delve into the raw HTML pages as they were downloaded. Retrieval speed is therefore not the main decision driver in where or how we store this data. On the other hand, keep in mind that we download millions of web pages per day – as you can imagine, storing all of that can get very expensive. That is why we chose S3 here: it offers cheap storage with reasonable retrieval speeds for single-use processing.

The amount of data we store keeps growing, though, and the probability that we’ll need to access any given piece of it quickly decreases over time. That is why we looked into alternatives for long-term, infrequent-access storage that are even cheaper than S3. We compared S3 with Glacier and Backblaze B2, and have summarised some of the key differences in the table above. Keeping in mind that we don’t expect to download data very often from this long-term storage, it’s clear B2 is much cheaper than S3. Glacier would have been a serious contender, but for the fact that it comes with a minimum billable object size that happens to be about 2.5 times our average file size; this translates roughly into a real storage cost of $0.009 for us – much more expensive than B2. On top of that, B2 does not charge for upload transactions (while Glacier does), and it also offers an S3-like API, so we can still access data on B2 as if it were on S3 when we need to. For all of these reasons we decided to offload our archive data to Backblaze B2.
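The effect of a minimum billable object size can be worked through with a few lines of arithmetic. The sketch below makes several assumptions: every file is treated as having exactly the average size, the price is taken to be per unit of stored data per month, and the list price of 0.0036 is simply back-calculated from the figures quoted above (0.009 / 2.5) rather than read from the table.

```python
def effective_price(
    list_price: float,
    avg_object_size: float,
    min_billable_size: float,
) -> float:
    """Price paid per unit of data actually stored.

    Objects smaller than the minimum are billed as if they had the minimum
    size, so the effective price scales with billed size / real size.
    """
    billed_size = max(avg_object_size, min_billable_size)
    return list_price * billed_size / avg_object_size


# Assumed inputs: sizes are expressed relative to the average file size.
ASSUMED_LIST_PRICE = 0.0036  # inferred, not taken from the comparison table
glacier_effective = effective_price(
    ASSUMED_LIST_PRICE,
    avg_object_size=1.0,
    min_billable_size=2.5,
)
print(f"effective price: {glacier_effective:.4f}")
```

Files at or above the minimum size are unaffected, which is why this penalty matters mostly for workloads made up of many small objects.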

That brings us to the end of today’s post. Now that we’ve explored how the data gets to the extracted stage and what it looks like once there, in the next post we’ll discuss how we go about standardising it and preparing it for analytics.

Want to know what happens with our data next? Check out how we Standardise and Shape our Data!
