Daltix Data Architecture: Data Access

26 October 2022

If you missed the three previous posts in the series, here are links to the last three:

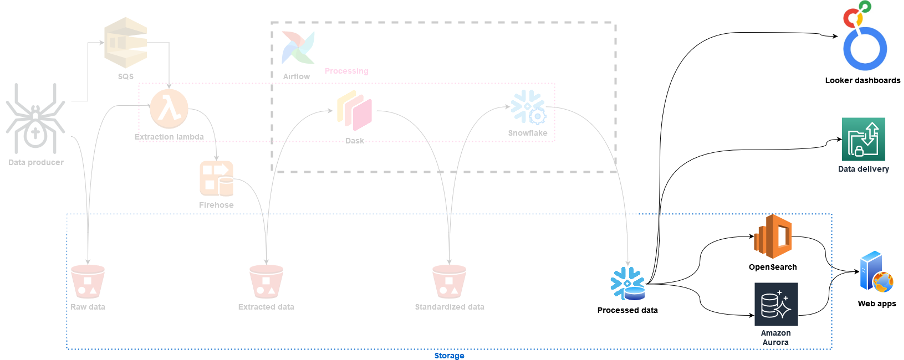

So far in this Data Architecture series, we’ve explained how our data gets transformed from its raw format (HTML pages or JSON documents dumped on S3) to a queryable structured data set available to analysts in Snowflake. In this post we’ll discuss the final piece of the puzzle – and often the hardest one: how do we ensure that everyone who needs to access our data, can do so in a way that fits their use case? To answer this, we’ll first take a look at two popular data setups: the data lake and the data warehouse. We’ll discuss our experiences with each, and how they fit in our data architecture.

On lakes and warehouses

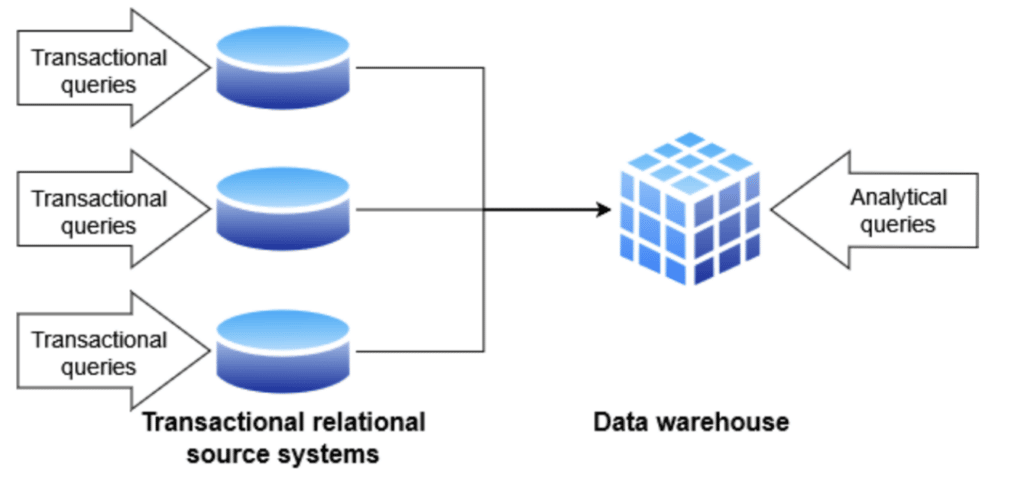

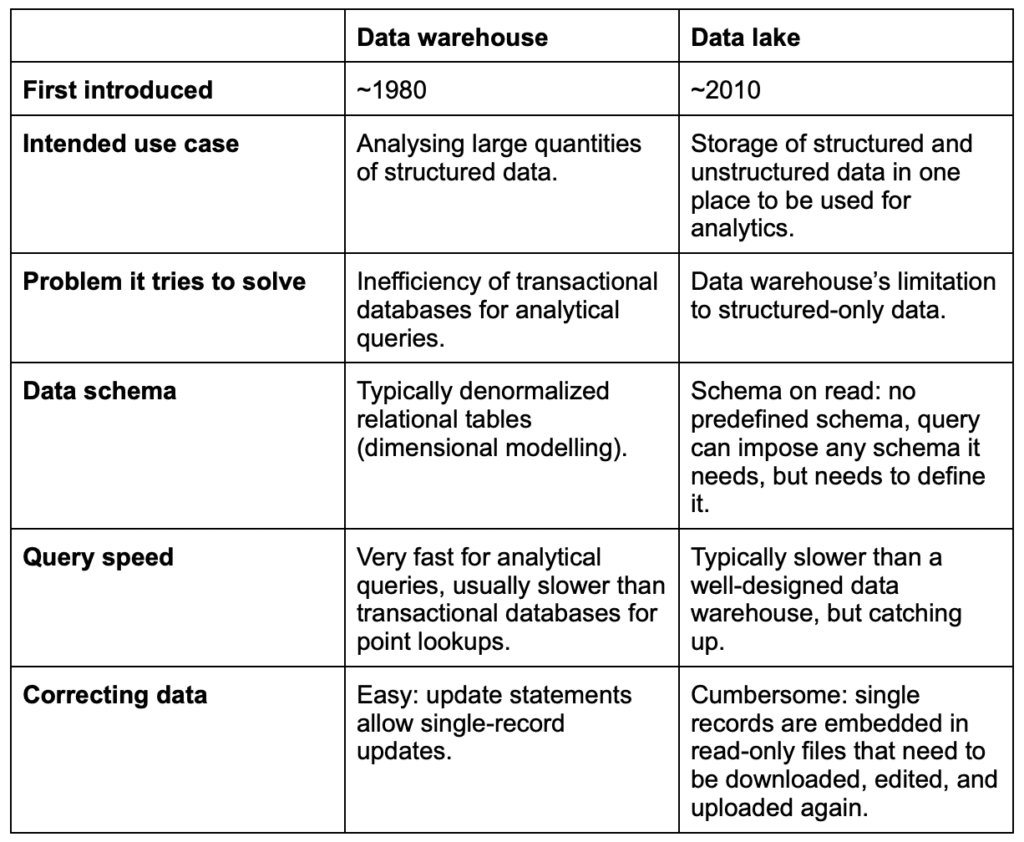

Data lakes and warehouses are two ways of managing data and getting value out of it, but they serve different use cases. The older of the two, the data warehouse, came to the fore back when transactional relational databases made up the vast majority of data systems in companies. As data analysis became more prevalent, people realised that the analytical queries they were running were very different from the typical transactional query: instead of looking up a single row and updating a value, you were now aggregating huge data sets and calculating metrics over them.

Transactional systems weren’t built for this type of queries, and neither was the often heavily normalised structure that data was stored in. This is where data warehouses came in: they were created to facilitate aggregations and occasional single-row lookups, rather than many random access line updates. Along with the technology to allow this, new ways of modelling data to make analytical queries faster to run and easier to write were also adopted, moving away from the normalised structures of transactional systems.

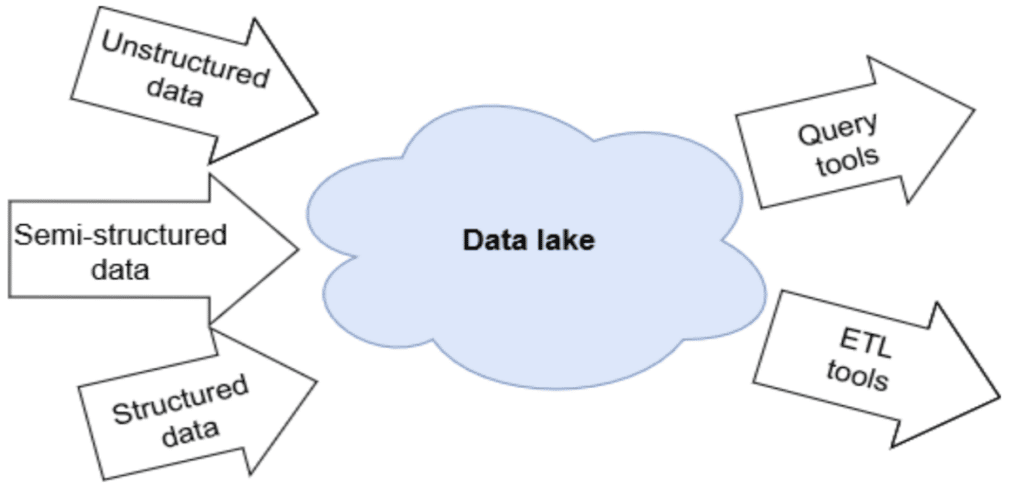

While data warehouses allow much more efficient analytical processing, they are still mostly relational systems. This means that, by and large, data stored must follow a certain format. There are ways to mitigate this: many warehouse platforms allow you to store free text or formats like JSON and XML in columns, but often these capabilities are limited and queries on them are not as performant on other columns. Often, fitting in data that did not adhere to a pre-defined, fixed schema meant either rewriting your schema (and transforming all your data already in it), or just not including that data at all. With unstructured data, e.g. images, video, IoT data etc., being seen more and more as valuable, this posed a challenge. Enter the data lake.

At its core, the data lake seeks to allow storing all your data, structured and unstructured, in one place cheaply, and to let you access it there directly. At first, it was really a storage solution: queries directly on the data lake were slow, and not expected to rival the speed of data warehouse queries. ETL pipelines read their data from the data lake into a processing engine, and stored it in data warehouses for routine analysis, but exploratory analysis could happen directly on data in the lake. More recently, tools have become available that improve data lake query performance. Some, like Databricks with Delta Lake, argue that this query performance has now caught up with data warehouse performance, and there should no longer be a distinction between data lake and warehouse, merging the two into the data lakehouse.

Since data lakes are meant primarily for storing data, they often take a hands-off approach to correcting data in it. It is often a cumbersome task: downloading a file, finding the line that needs updating, updating it, then uploading the entire file that contained it again to replace the original. For some use cases correcting data is not really an issue: when you’re collecting IoT sensor data for example, anomalies and wrong readings are expected, and consumers of this type of data typically handle this already. For other use cases, like ours, having wrong data in our lake means there is a risk we’ll someday sell that data to our customers. The data warehouse handles this more gracefully: you can usually update single records easily with an update command. Again, Delta Lake blurs the lines between the two technologies by allowing easy record-level updates in your lake directly.

Our data lake and warehouse

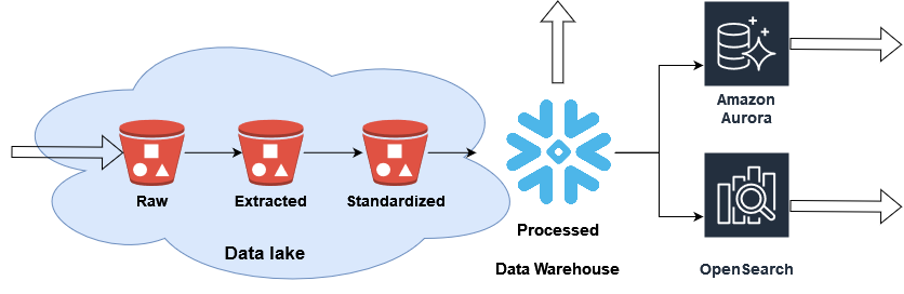

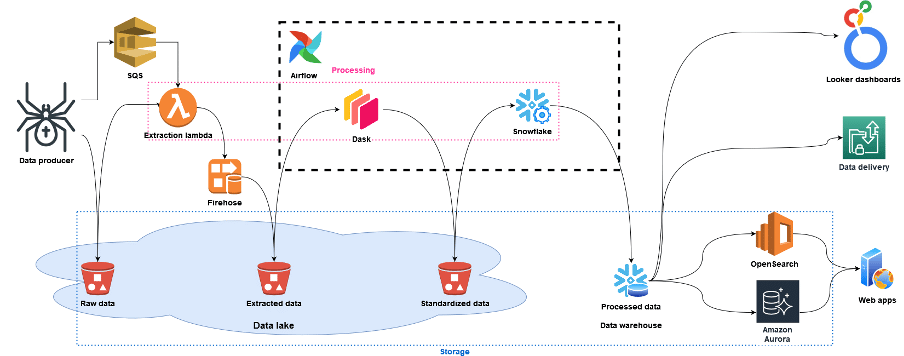

We introduced a data lake – comprising the raw, extracted, and standardised layers we discussed in previous posts – into our architecture exactly for the reason that it was originally developed: to give us more freedom in storing data in different formats, including HTML and JSON. This meant we no longer had to try and fit data to a schema that was pre-defined. Running batch transformation jobs (using Dask) that read their input from the lake, and wrote back to it, also worked marvellously.

Before long though, we found that this schema-on-read approach was putting a big burden on our data analysts. It often meant they were required to write queries to generate intermediate tables, rather than having the data stored in the required format ready for use. Also things like updating data for reporting (e.g. to correct a previous incorrect value) resulted in complex queries that needed to be written. In a way we had made our data very accessible to data engineers, but not really for our analytics teams who make the data go the last mile to the customer.

This was exactly the scenario that data warehouses excelled in though, so we added Snowflake to our infrastructure to transform and store our ready-to-use data. This meant that we could easily set up ETL pipelines written in SQL to transform the data into tables that were friendly to use for our analysts. This gain in efficiency for analysis was well worth the higher price for storing data in Snowflake rather than S3.

Bespoke access layer

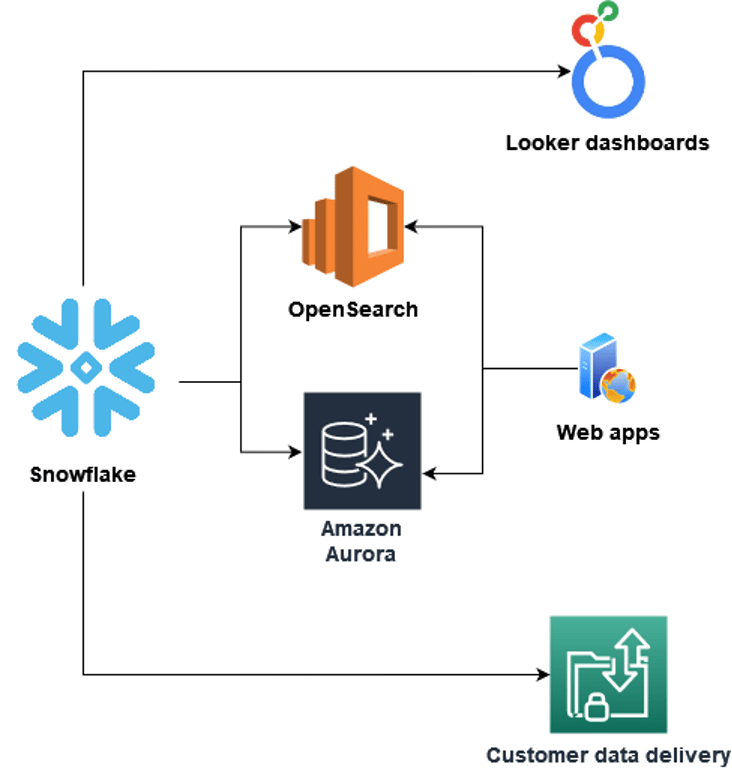

Once the data is in Snowflake in its final format, our standardised customer dashboards, written in Looker, read it directly from there. When we ship data to the customer, this also happens by extracting data directly from Snowflake to send on to them. However, we found that for some use cases that go beyond analytics, Snowflake did not provide the performance we needed. For these cases we’ve introduced a final stage in our data architecture: the bespoke access layer.

For our web applications such as our product matching tool we make a lot of single data point lookups, and we found that Snowflake struggled to retrieve these with low enough latency to not slow down the UI: while a second is not long to wait while doing some data analysis, it is very long when you’re waiting for your live dashboard to respond! To solve this, we introduced an AWS Aurora instance. Snowflake still serves up the necessary data here, and the subset of data required is merely replicated to AWS Aurora; the web application then reads it from there.

Our web application also includes free-text search boxes. Again, we found that Snowflake was not the best tool for the job here, but OpenSearch gave us the best performance for this type of queries, so we also replicate the data we need for this from Snowflake to OpenSearch.

That brings us to the end of the last post in this series where we’ve traced our data flow from ingestion into the data lake, over transformation through AWS Lambdas, Dask, and Snowflake, to presentation in the data warehouse in Snowflake and the bespoke access layer on Amazon Aurora and Opensearch. As is probably clear from this architecture, we strongly believe in using the right tool for the job, not tailoring the job to the tools you have. The combination of a data lake with a data warehouse allows us to keep huge amounts of data without limiting either the performance of the queries we run today, or which queries we can run in the future.

This architecture allows us to provide our customers with the data they need at a quality they’ve come to expect. In the world of data though, things are always moving, and we’re always considering new technologies or best practices to solve challenges we still have (like making our ETLs more testable and making sure our data lake also contains no incorrect values). It’s a journey, and we’re always welcoming talent to join us on it!

- Share this article

Related resources.

Daltix introduces it’s Data Quality Indicators to ensure data transparency

If your data provider doesn’t give you a clear outline of how they test their own data for quality, you should get suspicious. In order to deliver data you can trust, we’ve developed the Daltix Data Quality Indicators. Read about what our DQIs are and why it’s good for your data here!

Download the Daltix Summary & Data Span Matrix

As we are expanding across Europe, here is a downloadable matrix on what data and features Daltix offers as well as what countries we collect in and for what retailers.

Daltix Data Architecture: Data Access

In the final blog post of the data architecture series, we’ll take a look at how Daltix can provide everyone who requires their data access in a way that suits them. To answer that, we’ll examine our two data setups and explain our experiences and how they fit into our data architecture.